I am an ELLIS PhD student at INSAIT, where I am advised by Prof. Luc Van Gool, and I am currently a Machine Learning Researcher Intern at Netflix. From May to November 2024, I was a Student Researcher at Google DeepMind in Toronto, working with Robert Geirhos. Before my PhD journey began, I was a visiting researcher at CMU's Human Sensing Lab from 2021 to 2023, working with the amazing Fernando De La Torre. I also spent 7 wonderful years in the University of Toronto's Department of Computer Science, where I earned my HBSc and MS degrees. Email / CV / Google Scholar / Twitter / GitHub

I'm broadly interested in generative vision models for content creation, and I am currently focused on video synthesis. My research aims to better understand how to give users intuitive control over generative models. I am also interested in bias mitigation and in harnessing the power of large vision and language models by adapting them to personalized tasks with limited data. Relevant work is highlighted here.

Saman Motamed, Minghao Chen, Luc Van Gool, Iro Laina arXiv, 2025 code / arxiv A recipe to make video language models better understand physics, and a rigorous benchmark to test VLMs on physics understanding.

Saman Motamed, Laura Culp, Kevin Swersky, Priyank Jaini, Robert Geirhos WACV, 2026 code / arxiv A benchmark of real videos for testing the physics understanding of generative video models.

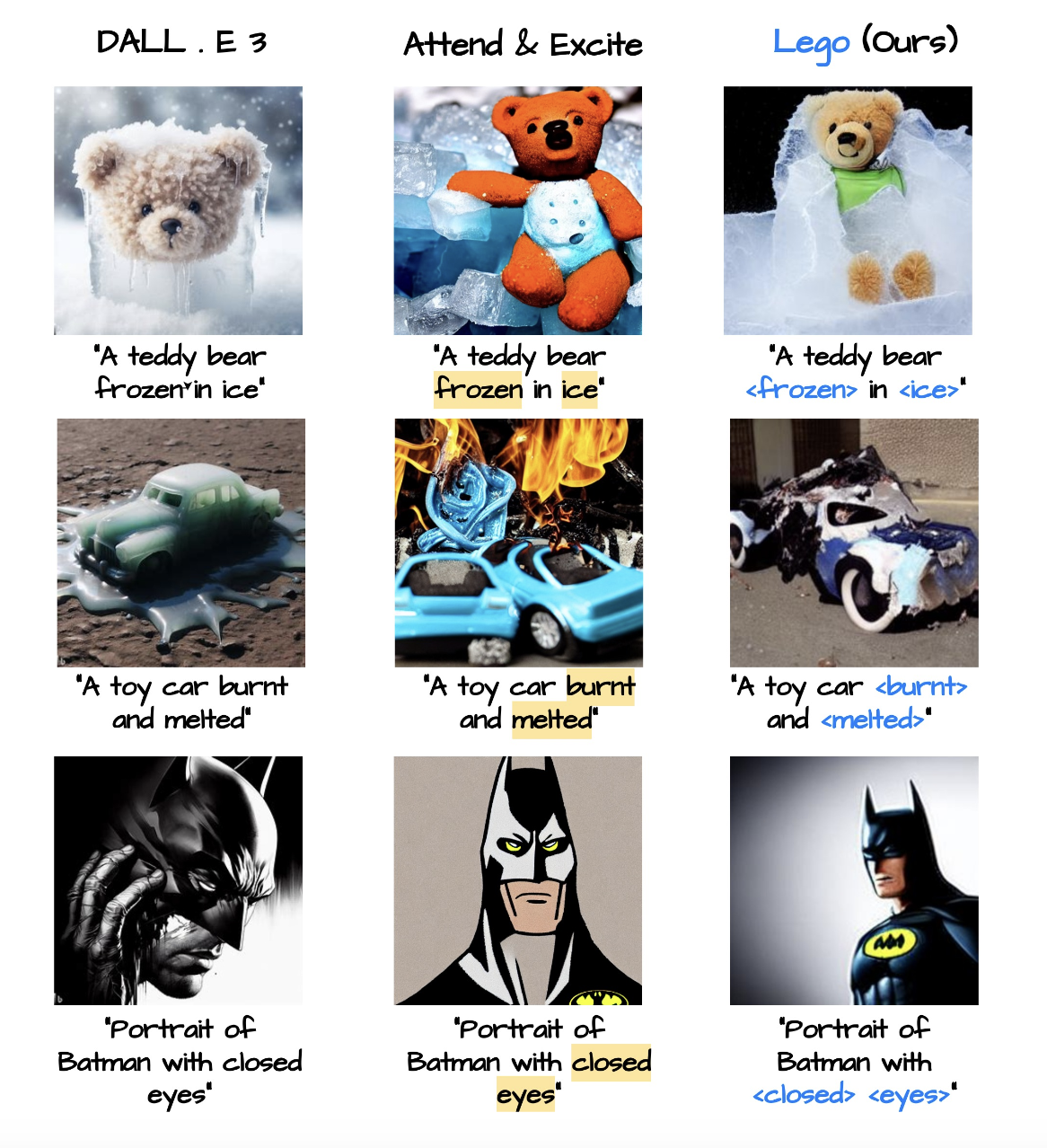

Saman Motamed, Danda Pani Paudel, Luc Van Gool ECCV, 2024 code / arxiv A method for textual inversion of adjectives and verbs in text-to-image diffusion models.

Saman Motamed, Wouter Van Gansbeke, Luc Van Gool CVPR Generative Models Workshop, 2024 code / arxiv Zero-shot control over object shape, position, and movement in text-to-video models via cross-attention maps.

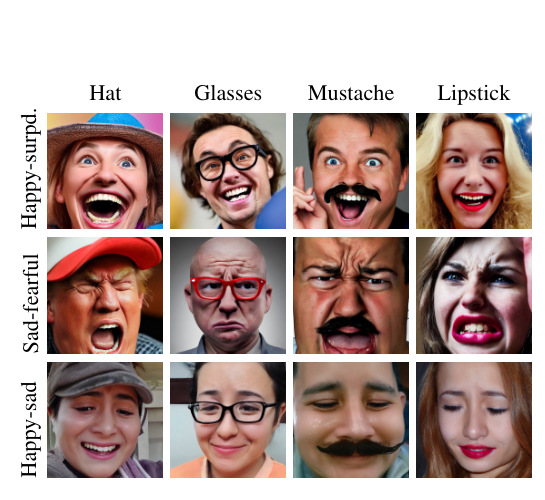

Reni Paskaleva, Mykyta Holubakha, Andela Ilic, Saman Motamed, Luc Van Gool, Danda Paudel CVPR, 2024 arxiv Fine-grained generation of expressions in conjunction with other textual inputs, together with a new label space for emotions.

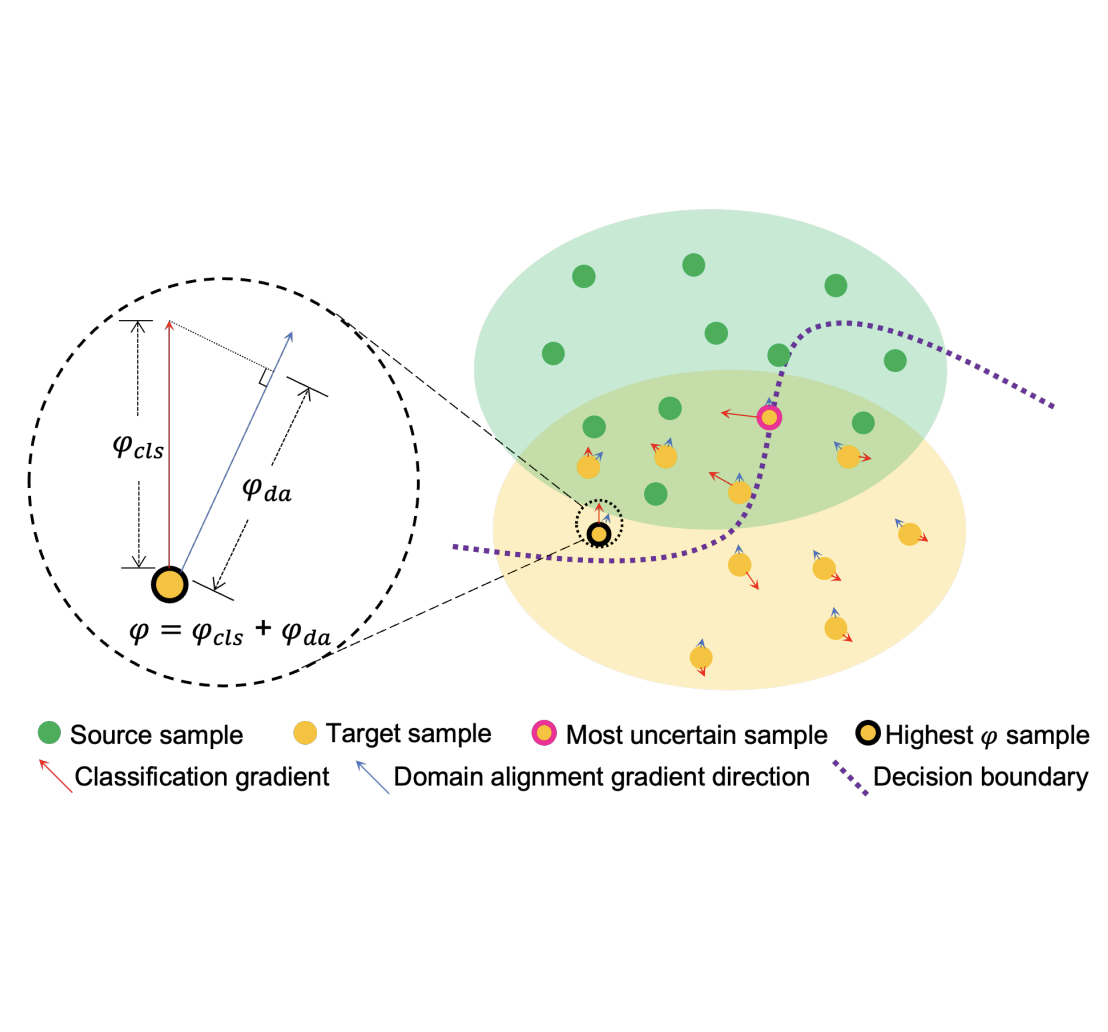

Lin Zhang, Linghan Xu, Saman Motamed, Shayok Chakraborty, Fernando De la Torre WACV, 2024 arxiv A Multi-Target Active Domain Adaptation (MT-ADA) framework for image classification.

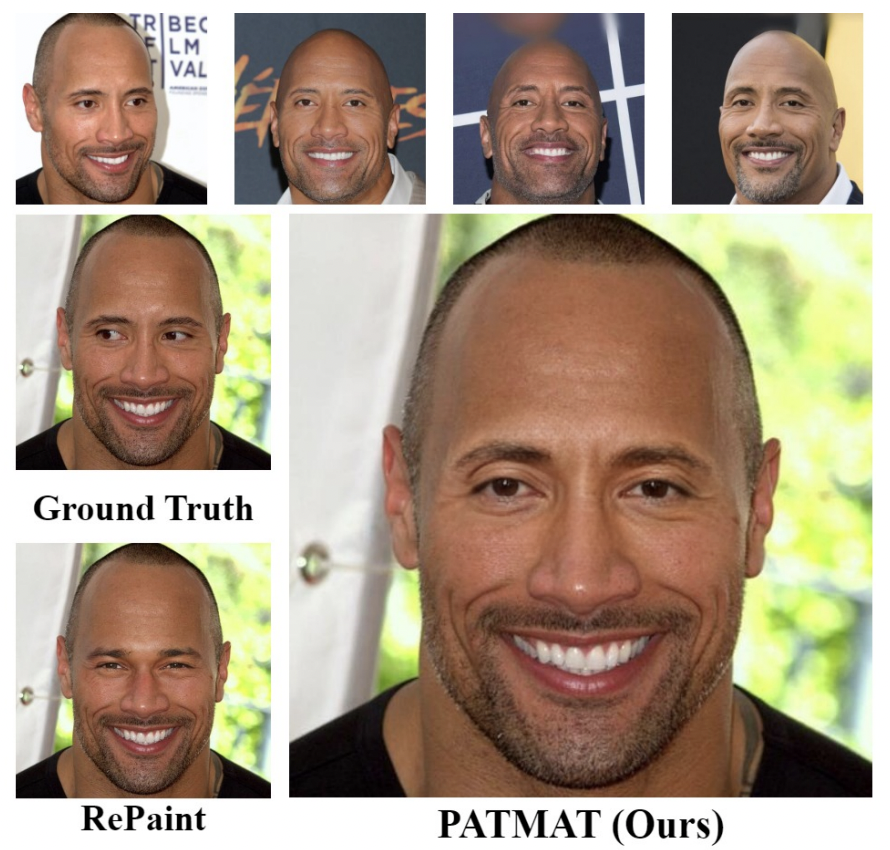

Jianjin Xu, Saman Motamed, Praneetha Vaddamanu, Chen Henry Wu, Christian Haene, Jean-Charles Bazin, Fernando De la Torre WACV, 2024 code / arxiv Fast, identity-preserving face inpainting with diffusion models.

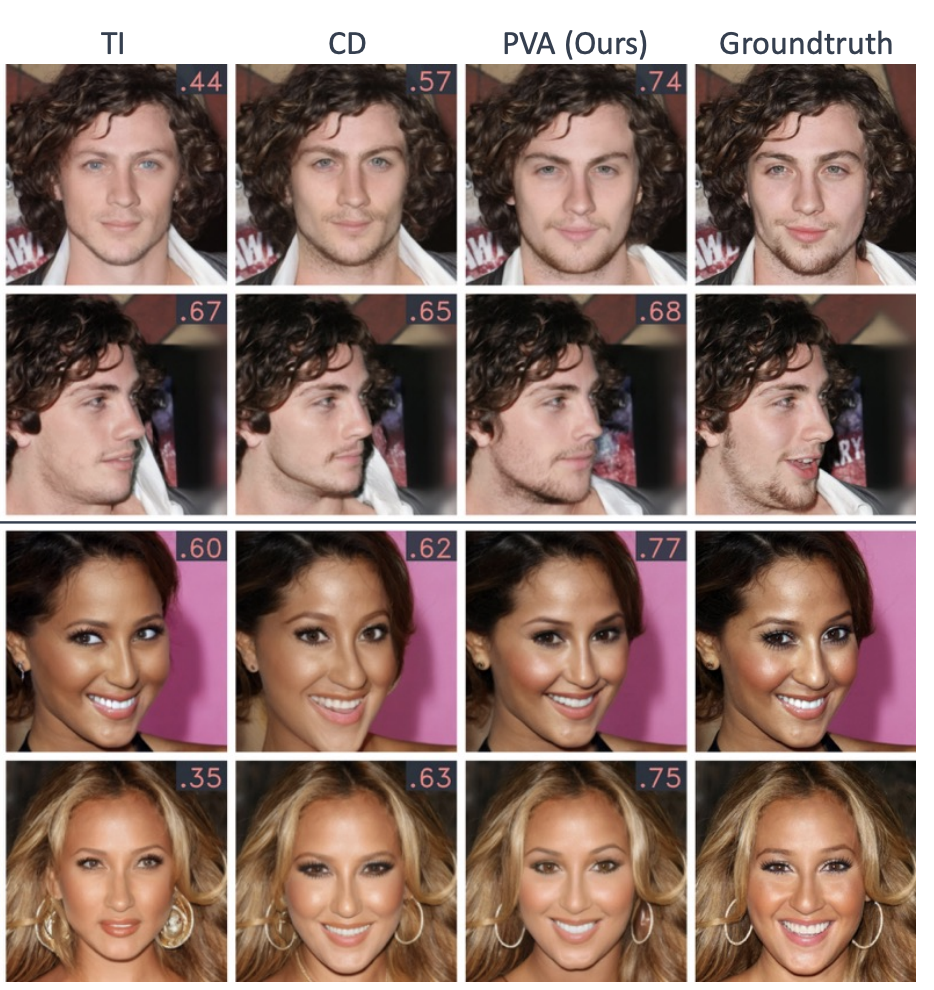

Saman Motamed, Jianjin Xu, Chen Henry Wu, Fernando De la Torre ICCV, 2023 ICCV / code / arxiv A tuning method for personalized face inpainting that preserves the subject's identity.

Chen Henry Wu, Saman Motamed, Shaunak Srivastava, Fernando De La Torre NeurIPS, 2022 NeurIPS / code / arxiv A framework for defining control over latent-based generative models.

Invited talks and presentations.

Somewhat related to computer vision and content creation, I enjoy film photography on 35 mm and medium-format film. You can view some of my photos below.